Post-PMF startups face a testing paradox. You need automation to handle the expanding regression surface, but traditional tools break every time your frontend changes. Manual QA can't keep up, yet most QA automation solutions built for scale demand so much engineering time that they defeat the purpose. The result is that teams either ship with crossed fingers or hire dedicated automation engineers just to maintain brittle test suites. Meanwhile, 89% of organizations are now piloting or deploying generative-AI-augmented quality engineering (QE) workflows, signaling a major shift toward AI-first quality engineering. There's a better path forward.

TLDR:

- Post-PMF startups need testing tools that handle rapid UI changes without breaking on every deploy.

- Vision-based testing using coordinates avoids brittle DOM selectors that fail when class names shift.

- Self-healing AI agents adapt to UI updates automatically, eliminating manual test maintenance overhead.

- Docket uses coordinate-based AI agents to test web apps like humans do, catching bugs before users hit them.

What Are Post-PMF Testing Tools?

Post-PMF testing tools bridge the gap between ad-hoc manual checks and fully automated engineering workflows. Once a company finds product-market fit, the cost of bugs spikes. Software testing typically consumes 20-40% of development budgets, making it a critical cost center for scaling startups.

With paying customers, churn directly affects revenue, and breaking core flows is no longer acceptable. These tools manage the expanding regression surface where manual oversight fails.

Effective startup testing tools prioritize:

- Critical Path Focus: Automating high-value flows like sign-ups and payments rather than striving for 100% coverage immediately.

- Resilience: Handling frequent UI updates without brittle script failures common in legacy enterprise suites.

- Pipeline Integration: Running QA checks on every commit to catch regressions early.

Implementing QA automation frees developers to ship features, providing stability without sacrificing velocity.

How We Ranked Startup Testing Tools

Teams moving past product-market fit need testing tools that don't create technical debt. Our evaluation weighs engineering velocity against robust quality assurance.

- Implementation Speed: Solutions must deliver value within hours. Post-PMF teams cannot halt development to construct complex frameworks.

- Maintenance Overhead: Self-healing capabilities take priority. If every UI update breaks the test suite, the tool adds friction instead of removing it.

- Accessibility: Many testers aren't comfortable with scripting, so low-code interfaces let product managers contribute tests without blocking engineers.

- Scalability: CI/CD integration runs the suite automatically on every deploy, catching regressions immediately.

Cost analysis compares each tool's pricing against the expense of hiring dedicated automation engineers. Tools that deliver high coverage without linear headcount growth score highest.
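To make the cost comparison concrete, the sketch below models yearly cost as a fee plus internal maintenance time plus dedicated headcount. It is a simplified illustration under stated assumptions, not the exact method behind these rankings: the `QAOption` class, the `annual_cost` function, and every number in the example are hypothetical placeholders rather than real vendor pricing or salary data.

```python
from dataclasses import dataclass


@dataclass
class QAOption:
    """One way of covering the regression surface (all figures hypothetical)."""
    name: str
    annual_fee: float                  # license or service fee per year
    maintenance_hours_per_week: float  # internal engineer time spent fixing tests
    dedicated_engineers: int           # automation engineers hired for the suite


def annual_cost(option: QAOption, hourly_rate: float, engineer_salary: float) -> float:
    """Yearly cost = fee + internal maintenance time + dedicated headcount."""
    maintenance = option.maintenance_hours_per_week * 52 * hourly_rate
    return option.annual_fee + maintenance + option.dedicated_engineers * engineer_salary


if __name__ == "__main__":
    # Placeholder inputs for illustration only; substitute your own numbers.
    HOURLY_RATE = 100.0
    ENGINEER_SALARY = 180_000.0
    options = [
        QAOption("in-house scripted suite", 0.0, 15.0, 1),
        QAOption("hypothetical AI testing tool", 30_000.0, 2.0, 0),
    ]
    # Print options from cheapest to most expensive per year.
    for opt in sorted(options, key=lambda o: annual_cost(o, HOURLY_RATE, ENGINEER_SALARY)):
        print(f"{opt.name}: ${annual_cost(opt, HOURLY_RATE, ENGINEER_SALARY):,.0f}/year")
```

With these placeholder inputs, the scripted suite comes to $258,000 a year and the hypothetical tool to $40,400. The headcount term is the one that grows linearly as the regression surface expands.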

Best Overall Startup Testing Tool: Docket

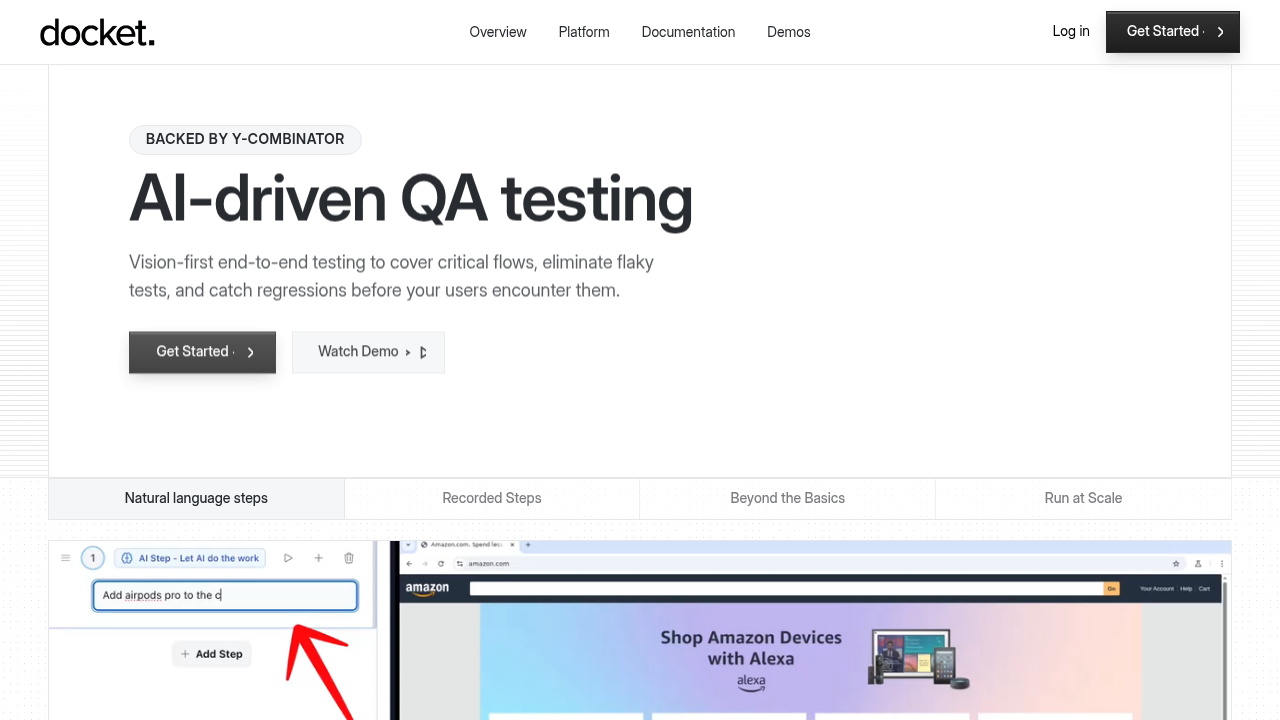

Docket targets post-PMF startups that need to scale QA automation without expanding headcount. Its vision-first, coordinate-based architecture removes the brittleness of DOM selectors. Rather than relying on code identifiers that break with frontend updates, Docket agents interact with applications visually, which keeps tests stable during rapid iteration.

What They Offer

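Docket uses self-healing logic to adapt to UI changes automatically: if a button moves, the test adjusts. Because this logic works from coordinates rather than DOM elements, it also handles complex canvas-based UIs, whose rendered content has no DOM elements for selector-based tools to target.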

- Natural Language Creation: Build tests using plain English or by recording steps, allowing non-engineers to contribute.

- Visual Reporting: Receive structured reports with screenshots, console logs, and replay capabilities to debug faster.

- Deep Integrations: Connect with Slack, Linear, Jira, and CI/CD pipelines for instant feedback loops.

- Exploratory Testing: Agents go beyond scripted paths to identify edge cases.

Good for: High-growth teams moving from manual QA to automated reliability. Docket's AI agents can build an initial test suite from the context you provide, removing the trade-off between velocity and quality assurance.

Limitation: Docket’s coordinate-based approach excels on visual interfaces but may require fine-tuning for highly dynamic or data-heavy back-end workflows that lack stable UI anchors.

Bottom line: Docket redefines startup QA by combining AI-driven visual recognition with no-code test creation, letting teams scale quality assurance while keeping pace with product velocity.

QA Wolf

QA Wolf functions as a managed service, combining AI with human engineers to handle end-to-end testing. It aims for 80% end-to-end test coverage, and because its engineers manage the scripts, teams avoid internal maintenance.

What They Offer

- Managed test creation via Playwright.

- 24/7 maintenance with human verification.

- Parallel cloud execution.

- Bug reports sent directly to issue trackers.

Good for: Teams with the budget to outsource startup quality assurance entirely.

Limitation: The service model creates vendor lock-in. Teams lose direct control over their test automation, relying on external engineers for updates rather than iterating independently.

Bottom line: QA Wolf fits companies seeking a hands-off approach. Engineering teams prioritizing velocity and control often prefer Docket's autonomous model to avoid service contracts.

testRigor

testRigor uses generative AI to enable plain English test authoring, interpreting user intent to remove coding barriers.

What They Offer

- Plain English creation without code

- Self-healing tests adapting to UI changes

- Support for web, mobile, desktop, and API

- AI generation from specifications

Good for: Non-technical teams requiring quick test creation via natural language.

Limitation: testRigor relies on text commands rather than vision-based coordinates, so it cannot "see" the interface the way a user does. Tests can fail when context shifts confuse its parser, and desktop support often requires higher-tier plans.

Bottom line: testRigor lowers coding hurdles. By contrast, Docket uses a vision-first model that mimics human visual interaction, maintaining resilience even when text descriptions or DOM structures change.

Mabl

Mabl provides a low-code solution for end-to-end validation. It applies machine learning to test maintenance, reducing suite management overhead.

What They Offer

- Low-code test creation via a browser extension recorder

- AI-powered auto-healing to track UI changes

- Unified coverage for web, mobile, API, and accessibility

- Generative AI assertions for AI-driven output validation

Good for: QA teams seeking a unified, low-code environment to automate browser, API, and accessibility testing without deep scripting expertise.

Limitation: Because Mabl depends on DOM selectors, test stability can degrade on canvas-based or rapidly changing UIs, and its opaque pricing model may hinder cost forecasting for scaling teams.

Bottom line: Mabl streamlines multi-surface testing for teams prioritizing simplicity and integration, though organizations with high UI variability may find greater long-term resilience in coordinate-based testing solutions like Docket.

Tricentis

Tricentis layers AI capabilities onto model-based automation for enterprise environments. The system relies on DOM object recognition rather than a vision-first architecture, favoring legacy stability over adaptability for rapidly changing interfaces.

What They Offer

- Enterprise management: Supports compliance and execution tracking for regulated industries.

- Model-based automation: Builds workflows using business modules instead of scripts.

- Legacy integration: Specialized support for SAP and Oracle stacks.

- Risk analysis: Prioritizes test execution based on business impact data.

Good for: Large organizations managing heavy legacy dependencies like SAP.

Limitation: High licensing costs and implementation overhead put Tricentis out of reach for lean teams. Its AI features sit on a DOM-based foundation, so tests remain brittle when selectors shift.

Bottom line: Tricentis fits regulated enterprise contexts but lacks the speed for post-PMF iteration. Docket uses vision-based agents to bypass the maintenance overhead inherent in these legacy suites.

Feature Comparison Table of Testing Tools

The table below contrasts Docket’s vision-first approach with the models above. By using visual data rather than code identifiers, Docket avoids both the maintenance burden of selector-based tools like Mabl and the human-gated workflows of managed services like QA Wolf. This structural shift lets autonomous agents adapt to UI changes without manual intervention.

Why Docket Is the Best Testing Tool for Post-PMF Startups

Post-PMF teams often hit a wall where rapid iteration breaks rigid test suites. Most startup testing tools rely on DOM selectors that fail whenever a developer modifies a class name. This forces engineers to fix broken scripts instead of building features.

Docket takes a different path with vision-based AI agents. The system navigates using x-y coordinates and visual elements rather than code hooks, which keeps tests resilient: if a button moves but remains visible, the test still passes.
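To illustrate why a refactor breaks selector-based tests but not coordinate-based ones, here is a deliberately simplified toy model in Python. It assumes each on-screen element's visible label is already known; a real vision-based agent would have to infer that from a screenshot with a trained model, and nothing here reflects Docket's actual implementation. The `Element` class, both lookup functions, and the class names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Element:
    label: str      # text a user sees on screen
    css_class: str  # implementation detail that selectors depend on
    x: int          # left edge, in pixels
    y: int          # top edge, in pixels
    width: int
    height: int


def find_by_selector(page: List[Element], css_class: str) -> Optional[Element]:
    """Selector-style lookup: tied to a class name in the markup."""
    return next((e for e in page if e.css_class == css_class), None)


def find_click_point(page: List[Element], label: str) -> Optional[Tuple[int, int]]:
    """Vision-style lookup (greatly simplified): find the element by what is
    rendered and return the screen coordinates of its center to click."""
    for e in page:
        if e.label == label:
            return (e.x + e.width // 2, e.y + e.height // 2)
    return None


# Hypothetical checkout page before and after a frontend refactor that
# renames the button's class and moves it further down the page.
before = [Element("Checkout", "btn-primary", 100, 400, 120, 40)]
after = [Element("Checkout", "button--cta", 300, 520, 140, 44)]

print(find_by_selector(before, "btn-primary") is not None)  # True
print(find_by_selector(after, "btn-primary"))               # None: selector test breaks
print(find_click_point(after, "Checkout"))                  # (370, 542): still clickable
```

In a real browser, the returned coordinates could be passed to a coordinate-level API such as Playwright's `page.mouse.click(x, y)`, which clicks at a viewport position without referencing any selector.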

By decoupling validation from the DOM, Docket lets automated QA withstand aggressive frontend updates. The agents detect visual intent and catch regressions without requiring dedicated automation engineers.

Final Thoughts on Automation for High-Growth Teams

The best post-PMF testing decouples validation from code selectors that break with every frontend change. Vision-based agents see your application the way users do, adapting to changes automatically. Your test suite becomes an asset instead of a maintenance burden.

FAQs

How do I choose the right testing tool for my post-PMF startup?

Match the tool's architecture to your team's constraints. If engineers are scarce and UI changes are frequent, vision-based tools like Docket avoid selector maintenance. If budget allows outsourcing, managed services like QA Wolf handle everything externally. Teams with legacy systems may need enterprise solutions like Tricentis despite higher overhead.

Which testing approach works best for startups with frequent UI updates?

Vision-based testing handles rapid frontend changes better than DOM-based tools. Coordinate-driven systems adapt when buttons move or layouts shift, while selector-based frameworks break with each class name change. This difference matters most when shipping daily or weekly releases.

Can non-technical team members create tests without engineering support?

Natural language tools like testRigor and Docket allow product managers to write tests in plain English. Docket's visual approach lets anyone record flows by clicking through the application. Low-code platforms like Mabl require some technical familiarity but less than script-based frameworks.

What's the difference between managed testing services and automated tools?

Managed services like QA Wolf provide human engineers who build and maintain tests for you, creating dependency on external teams. Automated tools like Docket run autonomously in CI/CD pipelines, giving engineering teams direct control. The trade-off is between hands-off convenience and independent velocity.

When should I switch from manual QA to automated testing?

Switch when manual regression testing consumes more than 10 hours per week or when bugs in core flows start affecting revenue. Post-PMF startups typically hit this threshold when shipping multiple releases per week and the regression surface area exceeds what one person can validate.
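The rule of thumb above can be expressed as a small calculation. This sketch assumes one full manual regression pass per release, so weekly manual effort is releases per week multiplied by hours per pass; `should_automate` and its parameters are hypothetical names, and the 10-hour default comes from the guideline above.

```python
def should_automate(
    releases_per_week: float,
    hours_per_regression_pass: float,
    core_flow_bugs_hurt_revenue: bool,
    threshold_hours: float = 10.0,
) -> bool:
    """Automate once weekly manual regression time exceeds the threshold,
    or once bugs in core flows start affecting revenue.
    Assumes one full manual regression pass per release."""
    weekly_manual_hours = releases_per_week * hours_per_regression_pass
    return weekly_manual_hours > threshold_hours or core_flow_bugs_hurt_revenue


print(should_automate(3, 4, False))  # True: 12 hours/week exceeds 10
print(should_automate(2, 4, False))  # False: 8 hours/week, no revenue-impacting bugs
```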
