Traditional testing tools struggle with canvas-based applications. The reason is simple: Cypress and Playwright locate elements through DOM selectors, and content drawn inside a canvas has no DOM nodes to select. Dynamic UI testing requires a different approach, one that uses coordinates and visual recognition instead of CSS classes or XPath expressions. When your interface changes often or renders content on a canvas, vision-based tools keep your tests stable without manual updates. This breakdown covers the platforms designed for canvas testing and what makes them work where traditional tools can't.

TLDR:

- Vision-based testing uses x-y coordinates instead of DOM selectors to test UIs

- Canvas elements and dynamic interfaces require coordinate-based tools to automate

- Docket uses fully coordinate-based interaction that eliminates selector brittleness

- Self-healing tests adapt to UI changes without manual maintenance or code updates

- Docket's AI agents test through coordinates, making it the only solution for canvas UIs

What is Vision-Based Testing?

Vision-based testing uses coordinate systems and visual recognition to interact with web applications, similar to how a human user navigates an interface. Instead of relying on underlying code structure, these tools identify elements by their visual appearance and screen position.

The core technical distinction is straightforward. Traditional DOM-based testing tools like Cypress and Playwright rely on element selectors: IDs, CSS classes, XPath expressions, or data attributes embedded in the HTML. When developers change a class name or restructure markup, these selectors break and tests fail.
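To make the brittleness concrete, here is a minimal sketch using only Python's standard library. The markup, the class names, and the `find_by_class` helper are hypothetical and stand in for whatever selector engine a real framework uses; the point is only that a lookup keyed on a class name returns nothing once that name changes, even though the button looks identical to a user.

```python
from html.parser import HTMLParser


class ClassFinder(HTMLParser):
    """Collects the tag names of elements that carry a given CSS class."""

    def __init__(self, css_class):
        super().__init__()
        self.css_class = css_class
        self.matches = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; class may hold several names.
        classes = (dict(attrs).get("class") or "").split()
        if self.css_class in classes:
            self.matches.append(tag)


def find_by_class(html, css_class):
    """Hypothetical stand-in for a selector lookup such as `.btn-primary`."""
    finder = ClassFinder(css_class)
    finder.feed(html)
    return finder.matches


# Hypothetical markup before and after a styling refactor.
before = '<button class="btn-primary">Save</button>'
after = '<button class="button button--main">Save</button>'

print(find_by_class(before, "btn-primary"))  # ['button']
print(find_by_class(after, "btn-primary"))   # []
```

The second lookup comes back empty, which is exactly the failure a selector-based test reports after a refactor that changed nothing visible.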

Vision-based testing bypasses the DOM entirely. It locates elements using x-y coordinates and visual pattern recognition, identifying buttons, fields, and links by how they appear on screen rather than by their underlying code. This approach mirrors human interaction: users don't care about a button's CSS class; they simply click what looks clickable.
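As a rough illustration of "visual pattern recognition", the sketch below searches a tiny grid of grayscale values (standing in for a screenshot) for a smaller grid (standing in for a button image) and returns the point to click. It assumes exact pixel equality, which real tools would not: production systems typically tolerate scaling, anti-aliasing, and theme changes. The function name and the data are invented for this example.

```python
from typing import Optional

Pixels = list[list[int]]  # rows of grayscale values, a stand-in for a screenshot


def find_template(screen: Pixels, template: Pixels) -> Optional[tuple[int, int]]:
    """Return the (x, y) centre of the first exact match of template in screen."""
    t_h, t_w = len(template), len(template[0])
    s_h, s_w = len(screen), len(screen[0])
    for top in range(s_h - t_h + 1):
        for left in range(s_w - t_w + 1):
            # Compare the template row by row against the window at (left, top).
            if all(
                screen[top + r][left:left + t_w] == template[r]
                for r in range(t_h)
            ):
                return (left + t_w // 2, top + t_h // 2)
    return None


# A 6x5 "screenshot" containing a 3x3 "button" whose top-left corner is at x=2, y=1.
screen = [
    [0, 0, 0, 0, 0, 0],
    [0, 0, 9, 9, 9, 0],
    [0, 0, 9, 1, 9, 0],
    [0, 0, 9, 9, 9, 0],
    [0, 0, 0, 0, 0, 0],
]
button = [
    [9, 9, 9],
    [9, 1, 9],
    [9, 9, 9],
]

print(find_template(screen, button))  # (3, 2)
```

Nothing in this lookup refers to markup, so it behaves the same whether the button is an HTML element or pixels painted onto a canvas.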

This makes vision-based testing particularly valuable for dynamic UIs, canvas-based applications, and interfaces that change frequently. When the visual presentation remains consistent but the underlying code shifts, vision-based tests continue working without modification.

How We Evaluated Vision-Based Testing Solutions

The evaluation focused on five core capabilities that differentiate vision-based testing from traditional DOM-based approaches:

- Ability to test canvas-based elements and dynamic UIs that lack stable DOM selectors

- Resilience to UI changes without requiring test updates after minor interface modifications

- Self-healing capabilities that automatically adapt tests when applications evolve

- Support for coordinate-based interactions rather than reliance on CSS selectors or XPath

- Test creation process that avoids manual selector management or extensive coding

All assessments are based on publicly available product documentation, feature specifications, and documented capabilities as of December 2025. Pricing and implementation complexity were considered secondary to core technical functionality.

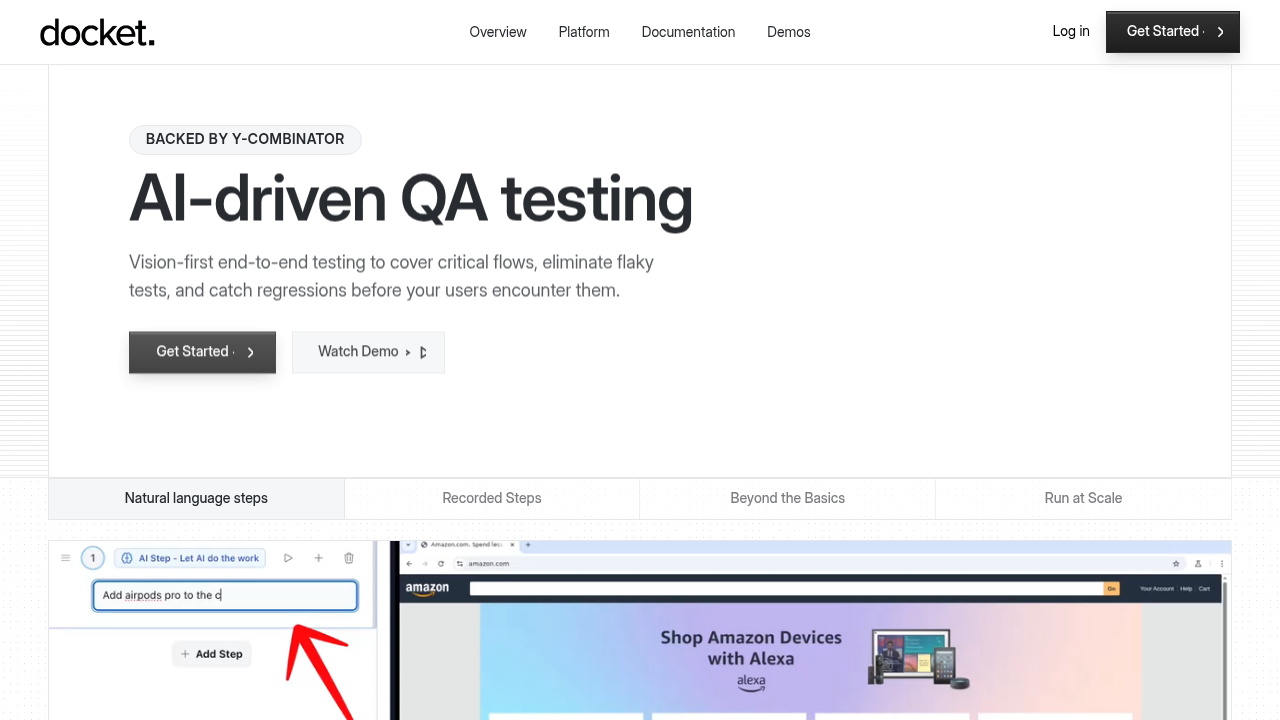

Best Overall Vision-Based Testing Solution: Docket

Docket represents a fundamental shift from DOM selector automation to fully coordinate-based, vision-first testing. The system uses AI-powered browser agents that interact with applications through x-y coordinates and visual understanding, eliminating the brittleness of traditional selector-based approaches.

What Docket Offers

- Fully coordinate-based interaction that works on any UI element, including canvas and dynamically rendered content

- Natural language test creation with autonomous AI agents that understand intent rather than requiring step-by-step instructions

- Self-healing tests that automatically adapt when UIs change, with zero maintenance overhead

- Visual bug reports with screenshots, console logs, network traces, and session replays

- Step recorder for saving reusable flows at scale

Docket is the only solution built from the ground up for vision-based, coordinate-driven automation, making it uniquely capable of testing canvas-based UIs, dynamic interfaces, and complex web applications that traditional DOM-based tools cannot handle.

Mabl

Mabl provides low-code test automation with some self-healing capabilities and visual testing features. The system uses DOM-based selectors as its primary interaction method, with AI features added as enhancements to the core framework.

What They Offer

- Low-code test creation interface with recording capabilities

- Auto-healing for broken selectors when DOM elements change

- Visual regression testing for detecting UI changes

- Integration with CI/CD pipelines and issue tracking systems

Mabl works well for teams familiar with traditional DOM-based testing who want some AI assistance with selector maintenance and need integrated visual regression capabilities.

The limitation is clear: Mabl remains fundamentally DOM-based rather than coordinate-based, meaning it cannot effectively test canvas elements or highly dynamic UIs where traditional selectors are unavailable. Its self-healing is reactive: it repairs a test after a selector has already broken, whereas a coordinate-based system never depends on the selector in the first place.
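For readers unfamiliar with the term, reactive self-healing can be sketched generically as follows. This is not Mabl's algorithm, which is not described here; it is a simplified illustration in which a test stores several attributes of an element (a hypothetical "fingerprint") and, when the recorded id no longer exists, falls back to the element that shares the most remaining attributes.

```python
from typing import Optional

Element = dict[str, str]


def locate(page: list[Element], fingerprint: Element) -> Optional[Element]:
    """Find the recorded element, healing by attribute overlap if its id is gone."""
    recorded_id = fingerprint.get("id")
    if recorded_id is not None:
        # Fast path: the recorded id still exists on the page.
        for el in page:
            if el.get("id") == recorded_id:
                return el
    # Healing path: pick the element sharing the most non-id attributes.
    best, best_score = None, 0
    for el in page:
        score = sum(
            1 for key, value in fingerprint.items()
            if key != "id" and el.get(key) == value
        )
        if score > best_score:
            best, best_score = el, score
    return best


# Hypothetical fingerprint recorded when the test was authored.
fingerprint = {"id": "save-btn", "tag": "button", "text": "Save", "role": "button"}

# Hypothetical page after a release that renamed the id.
page_after = [
    {"id": "cancel", "tag": "button", "text": "Cancel", "role": "button"},
    {"id": "submit-form", "tag": "button", "text": "Save", "role": "button"},
]

healed = locate(page_after, fingerprint)
print(healed["id"])  # submit-form
```

Every attribute in the fingerprint still comes from the DOM, so this style of healing has nothing to match against when the target is drawn inside a canvas.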

Mabl works well for standard web applications with traditional HTML elements. Teams testing canvas-based interfaces or complex dynamic UIs will find Docket's coordinate-based approach provides the resilience and capability their applications require.

Tricentis

Tricentis offers enterprise test automation with vision-based capabilities added as supplementary features to their core DOM-based testing framework. Their approach bolts AI onto existing selector-based infrastructure rather than building around vision-first principles.

What They Offer

- Enterprise-grade test management and execution infrastructure

- Model-based test automation with risk-based testing capabilities

- Vision AI as an add-on feature for specific use cases

- Extensive integration ecosystem for enterprise tools

Tricentis serves large enterprises already invested in their ecosystem who need comprehensive test management and can work primarily with DOM-accessible elements.

The limitation is architectural: vision capabilities are not core but rather supplementary features. The system lacks fully autonomous agentic test execution and remains fundamentally selector-dependent for primary test interactions, making canvas and coordinate-based testing an afterthought rather than a native capability.

Tricentis serves enterprise needs for traditional web testing well, but Docket's coordinate-based foundation makes it the better choice for teams that need reliable automation of dynamic UIs and canvas-based applications without architectural limitations.

Virtuoso QA

Virtuoso QA provides codeless test automation using natural language processing and DOM-based element detection with machine learning enhancements. The system uses intelligent selectors that adapt when DOM structures change.

What They Offer

- Natural language test authoring with NLP-based step creation

- Self-healing tests that automatically update when element selectors change

- Live authoring with real-time test validation during creation

- Cross-browser testing with detailed analytics and root cause analysis

Virtuoso works for QA teams transitioning from manual testing who want natural language test creation and need strong self-healing for DOM element changes across traditional web applications.

The limitation is fundamental: Virtuoso relies on DOM-level technical details for element location rather than true coordinate-based interaction. It cannot test canvas elements or applications where DOM access is unavailable, and requires elements to exist in accessible DOM structures for its machine learning to function.

Virtuoso excels at codeless automation for standard web apps, but Docket's coordinate-based approach provides the only viable solution for teams working with canvas-based UIs, dynamic interfaces, and applications where traditional element selectors simply don't exist.

QA Wolf

QA Wolf is a managed service that combines human QA engineers with Playwright-based test automation. The service writes and maintains DOM-based Playwright tests on behalf of clients with guaranteed test coverage.

What They Offer

- Managed service with dedicated QA engineers building tests for clients

- Playwright-based test scripts that clients own

- 24/7 test maintenance with guaranteed zero flaky tests

- Human-verified bug reports with detailed documentation

QA Wolf works for teams that want to outsource test creation and maintenance entirely and prefer having human engineers manage their Playwright-based test suite with hands-on service.

The limitation is architectural: tests use standard Playwright selectors rather than coordinate-based or vision-based methods. This means QA Wolf cannot automate canvas elements or dynamic UIs that lack accessible DOM selectors.

QA Wolf offers valuable managed services for traditional web testing, but teams needing coordinate-based automation for canvas UIs will find Docket's vision-first approach delivers both technical capability and operational control without ongoing service dependencies.

testRigor

testRigor uses plain English commands to generate tests that interact with applications based on user-visible text and UI elements. The system translates natural language into test actions using a proprietary engine.

What They Offer

- Plain English test creation without coding or technical selectors

- Cross-platform testing covering web, mobile, and desktop applications

- Test maintenance through natural language updates rather than code changes

- Screenshot-based validation for visual elements

testRigor works for teams that want maximum accessibility in test authoring and prefer text-based element identification for standard UI components with visible labels or text.

The limitation is technical: while testRigor claims vision-based capabilities, it primarily relies on OCR and text recognition rather than true coordinate-based interaction. It cannot effectively handle canvas elements that lack text labels or applications with purely graphical interfaces without accessible text.
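The gap between text recognition and coordinate-based interaction is easy to see in a small sketch. The code below is not testRigor's engine; `OcrWord`, `click_point_for`, and the sample data are hypothetical, and the OCR step itself is assumed to have already produced words with bounding boxes. A text label can be turned into a click point, but an icon with no text gives the lookup nothing to find.

```python
from typing import NamedTuple, Optional


class OcrWord(NamedTuple):
    """One recognised word and its bounding box in screen pixels."""
    text: str
    x: int
    y: int
    width: int
    height: int


def click_point_for(label: str, words: list[OcrWord]) -> Optional[tuple[int, int]]:
    """Return the centre of the first word matching label, ignoring case."""
    for word in words:
        if word.text.lower() == label.lower():
            return (word.x + word.width // 2, word.y + word.height // 2)
    return None


# Hypothetical OCR output for a drawing tool: the toolbar has two text buttons,
# while the brush tool is an icon without any text.
words = [
    OcrWord("Export", x=20, y=10, width=60, height=20),
    OcrWord("Layers", x=700, y=10, width=60, height=20),
]

print(click_point_for("export", words))  # (50, 20)
print(click_point_for("brush", words))   # None
```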

testRigor democratizes test creation through natural language, but Docket's true coordinate-based interaction provides the technical foundation needed for canvas testing and dynamic UIs where text-based identification falls short.

ContextQA

ContextQA provides AI-powered test automation with a step recorder and visual testing capabilities. Tests are created through manual click recording that captures each interaction during the authoring process.

What They Offer

- Step recorder that captures user actions during test creation

- Visual element recognition for test maintenance

- Cloud-based test execution infrastructure

- Integration with standard CI/CD pipelines

ContextQA works for small teams that need straightforward record-and-replay functionality with some visual recognition for element identification on standard web interfaces.

The limitation is architectural: the system requires manual specification of each individual click and action during recording rather than autonomous objective-based testing. The step recorder captures DOM-based interactions, not true coordinate-based actions, limiting effectiveness with canvas elements and dynamic UIs.

ContextQA offers basic automation for simple workflows, but Docket's autonomous AI agents and native coordinate-based architecture provide the sophistication and capability required for testing complex, canvas-based, and dynamically rendered applications.

Stably AI

Stably AI generates Playwright test scripts using AI to create code-based automation. The output remains traditional Playwright scripts using DOM selectors rather than coordinate-based interaction methods.

What They Offer

- AI-assisted generation of Playwright test code

- Standard Playwright framework output that teams can customize

- Code-based test scripts that integrate with existing Playwright workflows

Stably AI works for development teams already using Playwright who want AI assistance in generating initial test scripts but still prefer working with code directly.

The limitation is fundamental: generated scripts remain selector-based with the same brittleness that affects all Playwright tests. Canvas elements and dynamic UIs remain inaccessible since underlying Playwright limitations persist regardless of how scripts are generated.

Stably AI may accelerate Playwright script creation, but Docket's coordinate-based foundation eliminates the selector brittleness that plagues all Playwright-based approaches.

Feature Comparison: Vision-Based Testing Capabilities

The technical differences between coordinate-based and DOM-based approaches become clear when comparing specific capabilities. This comparison focuses on the core features that define true vision-based testing rather than AI-enhanced selector automation.

Why Docket is the Best Vision-Based Testing Solution

Docket's coordinate-based architecture represents the only true vision-first approach to test automation. While other solutions add AI features onto DOM-based foundations or claim vision capabilities through OCR and text recognition, Docket interacts with applications through x-y coordinates from the ground up.

This fundamental difference makes canvas testing straightforward rather than impossible. Traditional canvas testing requires complex workarounds that ultimately fail because DOM selectors cannot access canvas-rendered elements. Docket's coordinate system handles these interfaces natively.
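The arithmetic behind addressing a point on a canvas is simple, and a short sketch shows it. This is a general illustration, not Docket's implementation: `CanvasGeometry` and `to_viewport` are names invented here, and the numbers are made up. The one subtlety is that a canvas has a drawing-buffer size (its width and height attributes) that can differ from the size at which it is displayed, so drawing coordinates must be scaled before they are used as a click position.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CanvasGeometry:
    """Where a canvas sits on screen and how large its drawing buffer is."""
    left: float        # top-left corner in viewport pixels
    top: float
    css_width: float   # displayed size in viewport pixels
    css_height: float
    draw_width: int    # drawing-buffer size (the canvas width/height attributes)
    draw_height: int


def to_viewport(g: CanvasGeometry, draw_x: float, draw_y: float) -> tuple[float, float]:
    """Convert a point in drawing-buffer coordinates to viewport coordinates."""
    scale_x = g.css_width / g.draw_width
    scale_y = g.css_height / g.draw_height
    return (g.left + draw_x * scale_x, g.top + draw_y * scale_y)


# Hypothetical canvas: drawn at 1600x1200, displayed at 800x600, offset by (100, 50).
geometry = CanvasGeometry(
    left=100, top=50, css_width=800, css_height=600, draw_width=1600, draw_height=1200
)

print(to_viewport(geometry, 400, 300))    # (300.0, 200.0)
print(to_viewport(geometry, 1600, 1200))  # (900.0, 650.0)
```

The resulting pair is what a coordinate-driven agent would hand to its click action; no selector is involved at any step.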

Eliminating flaky tests also saves money. Flaky tests waste engineering time on reruns and triage, delay releases, undermine confidence in automation, and push teams back to manual testing.

Canvas testing with Cypress requires workarounds because the framework cannot select the individual shapes drawn inside a canvas. The same limitation applies to Playwright and other DOM-based tools. Docket eliminates these architectural constraints entirely through coordinate-based interaction that works regardless of how UIs are rendered.

Final Thoughts on Coordinate-Based Testing Approaches

When dynamic UI testing becomes a bottleneck, the cause is usually the architecture of the approach rather than the choice of a particular tool. DOM selectors break because they depend on implementation details that change frequently. Coordinate-based interaction works regardless of how developers structure their code. Your automation should mirror how users actually interact with applications: visually, not through CSS classes. The right foundation makes maintenance disappear instead of just making it easier.

FAQ

How do you choose between vision-based and DOM-based testing tools?

Vision-based tools work best for canvas elements, dynamic UIs, and applications where traditional selectors break frequently. DOM-based tools remain viable for stable HTML applications where element selectors rarely change and canvas testing isn't required.

Which vision-based testing solution works best for teams without coding experience?

Docket and testRigor both offer natural language test creation without coding requirements. Docket's coordinate-based approach handles canvas and dynamic UIs that testRigor's text-recognition method cannot test, making it the stronger choice for complex interfaces.

Can vision-based testing tools handle applications built with canvas elements?

Only coordinate-based solutions can test canvas elements reliably. Docket's x-y coordinate system works natively with canvas UIs, while DOM-based tools like Playwright and Cypress cannot select canvas-rendered elements, regardless of AI enhancements.

What causes the maintenance overhead difference between testing approaches?

DOM-based tools break when developers change CSS classes, IDs, or HTML structure, requiring constant selector updates. Coordinate-based testing identifies elements by visual position and appearance, continuing to work when underlying code changes but visual presentation remains consistent.

When should teams consider switching from DOM-based to coordinate-based testing?

Teams should evaluate coordinate-based testing when maintaining flaky tests consumes significant engineering time, when testing canvas-based applications, or when UI changes frequently break existing test suites despite functional correctness.
