If you're running weekly release testing, you've probably noticed that traditional test automation doesn't scale at this speed. DOM-based selectors break constantly as your application evolves, turning test maintenance into a full-time job. The tools designed for slower release cycles create bottlenecks when you're trying to ship every week. You need tests that heal themselves, not ones that require constant babysitting between deployments.

TLDR:

- Vision-based testing eliminates DOM selector brittleness that breaks traditional tools during weekly UI changes

- Self-healing AI agents adapt automatically to application changes, removing test maintenance overhead

- Docket uses coordinate-based testing to interact with apps like humans do, not fragile CSS selectors

- Teams shipping weekly need regression suites that run fast and stay current without manual updates

- Docket's AI agents perform exploratory testing beyond predefined scenarios, catching edge cases automatically

What is regression testing for weekly releases?

Regression testing validates that new code deployments haven't broken existing features. When teams ship weekly, each release introduces changes that could inadvertently disrupt previously working functionality. A button that worked last Tuesday might stop responding after Friday's update. A checkout flow that processed orders smoothly could fail after a database migration.

This differs from other testing types in timing and scope. Unit tests verify individual functions. Integration tests check how components interact. Regression testing ensures the entire application still works as expected after changes, focusing on protecting what already functioned correctly.
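As a rough illustration of the idea, the sketch below reruns a set of critical flows and compares each result with the value recorded at the last known-good release. The flow names, return values, and the `run_regression` helper are all hypothetical stand-ins; a real suite would drive the application end to end.

```python
from typing import Callable, Dict


def run_regression(flows: Dict[str, Callable[[], object]],
                   baseline: Dict[str, object]) -> Dict[str, str]:
    """Run each flow and compare its result with the last known-good value."""
    report = {}
    for name, flow in flows.items():
        try:
            actual = flow()
        except Exception as exc:  # a crash inside a flow also counts as a regression
            report[name] = f"error: {exc}"
            continue
        report[name] = "pass" if actual == baseline.get(name) else "regressed"
    return report


# Hypothetical flows standing in for real end-to-end checks.
def checkout_total_cents() -> int:
    return 1999 * 2 + 500  # two items plus shipping, in cents


def login_redirect() -> str:
    return "/dashboard"


# Values recorded from the previous release (assumed for this example).
baseline = {"checkout_total_cents": 4498, "login_redirect": "/home"}
flows = {"checkout_total_cents": checkout_total_cents,
         "login_redirect": login_redirect}

report = run_regression(flows, baseline)
print(report)
```

Here the checkout flow still matches its baseline, while the login redirect is flagged because its behavior differs from what the previous release recorded.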

Weekly cycles compress the traditional testing timeline. Teams have days, not weeks, to verify that every critical user flow remains intact before the next deployment.

How we ranked regression testing tools

The evaluation of regression testing tools focused on what matters when deploying code every seven days: speed, resilience, and minimal manual intervention.

Test execution speed determines whether teams can run full regression suites between commits. Tools were measured on how quickly they complete comprehensive end-to-end test runs across critical user flows.

Self-healing capability separates tools that break with every UI change from those that adapt automatically. The best solutions recognize elements by intent and context rather than fragile selectors that require constant updates.

CI/CD integration ease affects adoption friction. Tools that require extensive configuration or custom scripting slow teams down. The ranking prioritized solutions that connect to existing pipelines with minimal setup.

Maintenance overhead ultimately determines long-term viability. Tools were assessed on how much engineering time they consume after initial implementation, particularly when applications evolve rapidly across weekly releases.
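One way a team could apply these four criteria to its own shortlist is a simple weighted score. The sketch below is illustrative only: the weights, the 1-to-5 ratings, and the tool names `tool_a` and `tool_b` are invented for the example and are not the figures behind this ranking.

```python
# Hypothetical weights for the four criteria described above (they sum to 1.0).
WEIGHTS = {
    "speed": 0.25,
    "self_healing": 0.30,
    "ci_cd": 0.20,
    "maintenance": 0.25,
}


def weighted_score(ratings: dict) -> float:
    """Combine 1-5 ratings per criterion into a single weighted score."""
    missing = set(WEIGHTS) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    return sum(WEIGHTS[criterion] * ratings[criterion] for criterion in WEIGHTS)


# Made-up ratings for two placeholder tools.
candidates = {
    "tool_a": {"speed": 4, "self_healing": 5, "ci_cd": 3, "maintenance": 4},
    "tool_b": {"speed": 5, "self_healing": 2, "ci_cd": 4, "maintenance": 2},
}

scores = {name: weighted_score(r) for name, r in candidates.items()}
ranking = sorted(scores, key=scores.get, reverse=True)
print(ranking, scores)
```

Adjusting the weights to match a team's own priorities changes the ordering, which is the point of making them explicit.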

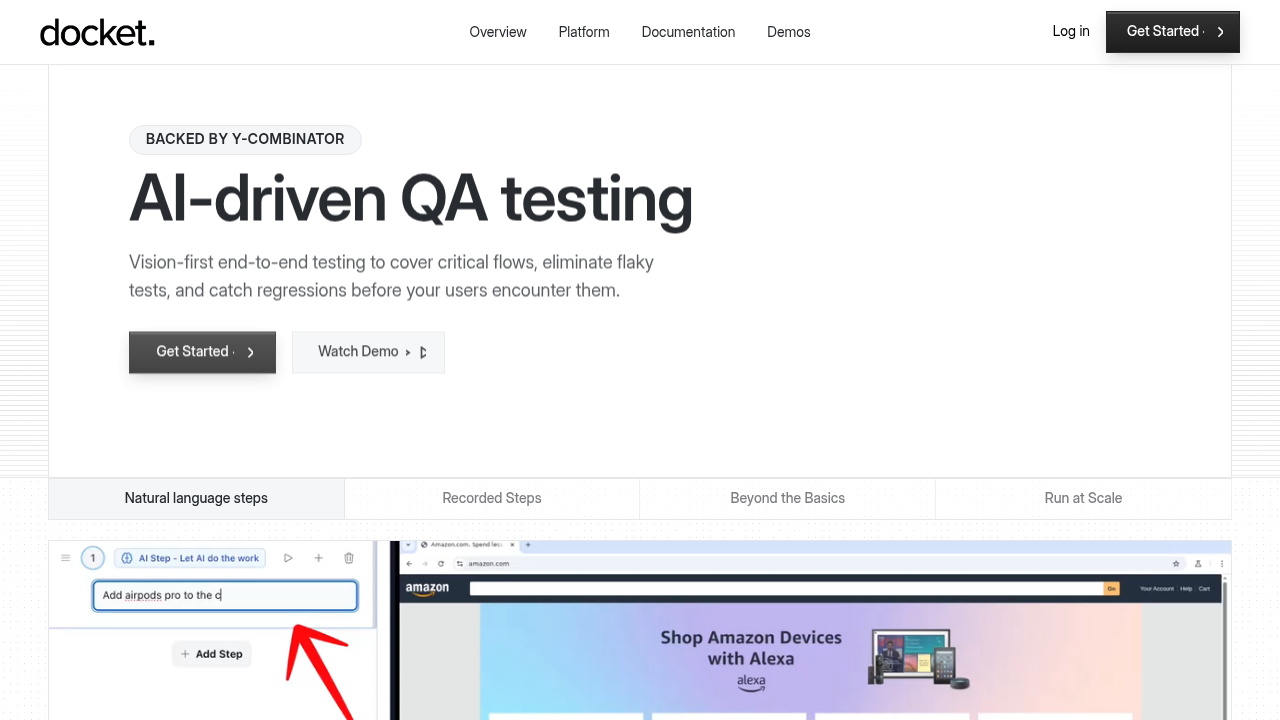

Best Overall Regression Testing Tool: Docket

Docket is a vision-based QA automation solution built for teams that need zero-maintenance regression testing across weekly release cycles.

The core difference lies in coordinate-based testing. Docket eliminates brittle DOM selectors completely, interacting with applications through x-y coordinates and visual recognition. Tests remain resilient to the UI changes that happen constantly during rapid iteration.
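To make the general idea concrete, here is a toy sketch of locating a control by what it looks like and returning x-y coordinates to click. It is not Docket's implementation: the "screenshot" is a small character grid, matching is exact, and `find_template` is a hypothetical helper. A real vision system would work on pixels and tolerate rendering differences.

```python
from typing import List, Optional, Tuple

Grid = List[str]  # each string is one row of "pixels"


def find_template(screen: Grid, template: Grid) -> Optional[Tuple[int, int]]:
    """Return the (x, y) centre of the first exact match of template, or None."""
    t_h, t_w = len(template), len(template[0])
    for y in range(len(screen) - t_h + 1):
        for x in range(len(screen[0]) - t_w + 1):
            if all(screen[y + dy][x:x + t_w] == template[dy] for dy in range(t_h)):
                return (x + t_w // 2, y + t_h // 2)
    return None


# What the "OK" button looks like, independent of where it sits in the markup.
button = [
    "####",
    "#OK#",
    "####",
]

screen_v1 = [
    "..........",
    ".####.....",
    ".#OK#.....",
    ".####.....",
    "..........",
]

# After a layout change the button has moved, but it still looks the same.
screen_v2 = [
    "..........",
    "..........",
    ".....####.",
    ".....#OK#.",
    ".....####.",
]

click_v1 = find_template(screen_v1, button)
click_v2 = find_template(screen_v2, button)
print(click_v1, click_v2)
```

The same lookup yields a new click target after the layout change, with no selector to update.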

Self-healing test suites automatically adapt to application changes without requiring manual updates between releases. When the interface evolves, Docket's AI agents recognize elements by context and intent rather than fragile selectors that break with minor frontend modifications.
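A generic way to picture self-healing is an ordered list of hints about an element, tried from most to least specific. The sketch below assumes a page represented as a list of attribute dictionaries; the `locate` helper and the attribute names are hypothetical, and production tools (including Docket) may work quite differently.

```python
from typing import Dict, List, Optional, Tuple

Element = Dict[str, str]


def locate(elements: List[Element],
           hints: List[Tuple[str, str]]) -> Tuple[Optional[Element], Optional[str]]:
    """Try each (attribute, value) hint in order.

    Returns the matched element and the attribute that found it,
    or (None, None) when no hint gives an unambiguous match.
    """
    for attribute, value in hints:
        matches = [e for e in elements if e.get(attribute) == value]
        if len(matches) == 1:  # accept only unambiguous matches
            return matches[0], attribute
    return None, None


# Hints recorded when the test was created, most specific first.
hints = [("id", "submit-btn"), ("label", "Submit order"), ("role", "button")]

page_v1 = [
    {"id": "submit-btn", "label": "Submit order", "role": "button"},
    {"id": "cancel", "label": "Cancel", "role": "button"},
]

# The next release renames the id; the visible label is unchanged.
page_v2 = [
    {"id": "order-cta", "label": "Submit order", "role": "button"},
    {"id": "cancel", "label": "Cancel", "role": "button"},
]

element_v1, used_v1 = locate(page_v1, hints)
element_v2, used_v2 = locate(page_v2, hints)
print(used_v1, used_v2)
```

On the second page the `id` hint fails and the lookup falls back to the label, which is the kind of recovery the term self-healing usually describes.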

Test creation happens through natural language instructions or the step recorder. Teams can build comprehensive regression suites quickly by describing user flows in plain English or clicking through workflows once to capture reusable steps. The approach scales to thousands of stable tests without writing code.

Visual bug reports include screenshots, context, console logs, and network traces. When tests fail, developers receive structured information that accelerates triage during compressed release cycles.

The AI agents perform exploratory testing beyond predefined scenarios, discovering edge cases and issues that static test suites miss. This coverage expands automatically as applications evolve. Tests integrate directly into CI/CD pipelines and run on every commit, providing immediate feedback without blocking deployments.

Docket replaces the constant test maintenance burden with intelligent automation that keeps pace with weekly releases, allowing engineering teams to ship confidently without dedicating resources to fixing broken tests after every code change.

QA Wolf

QA Wolf is a managed QA service that combines human QA engineers with Playwright-based test automation.

What they offer

- Managed service model with 24-hour test maintenance handled by their team

- 80% automated test coverage built within 4 months using the Playwright framework

- Unlimited parallel test runs on their cloud infrastructure

- Zero-flake guarantee through human verification of all test failures

Good for: Engineering teams that prefer outsourcing the entire QA function to a managed service provider rather than building internal automation capabilities.

Limitation: QA Wolf uses vanilla, open-source Microsoft Playwright, which relies on DOM selectors that still require manual updates when the UI structure changes. The service model means teams depend on QA Wolf's availability and response times rather than having autonomous test execution, which can introduce delays during critical weekly deployment windows.

Bottom line: QA Wolf works for teams willing to outsource testing completely, but Docket offers faster autonomous execution and self-healing tests without requiring external teams to maintain regression suites.

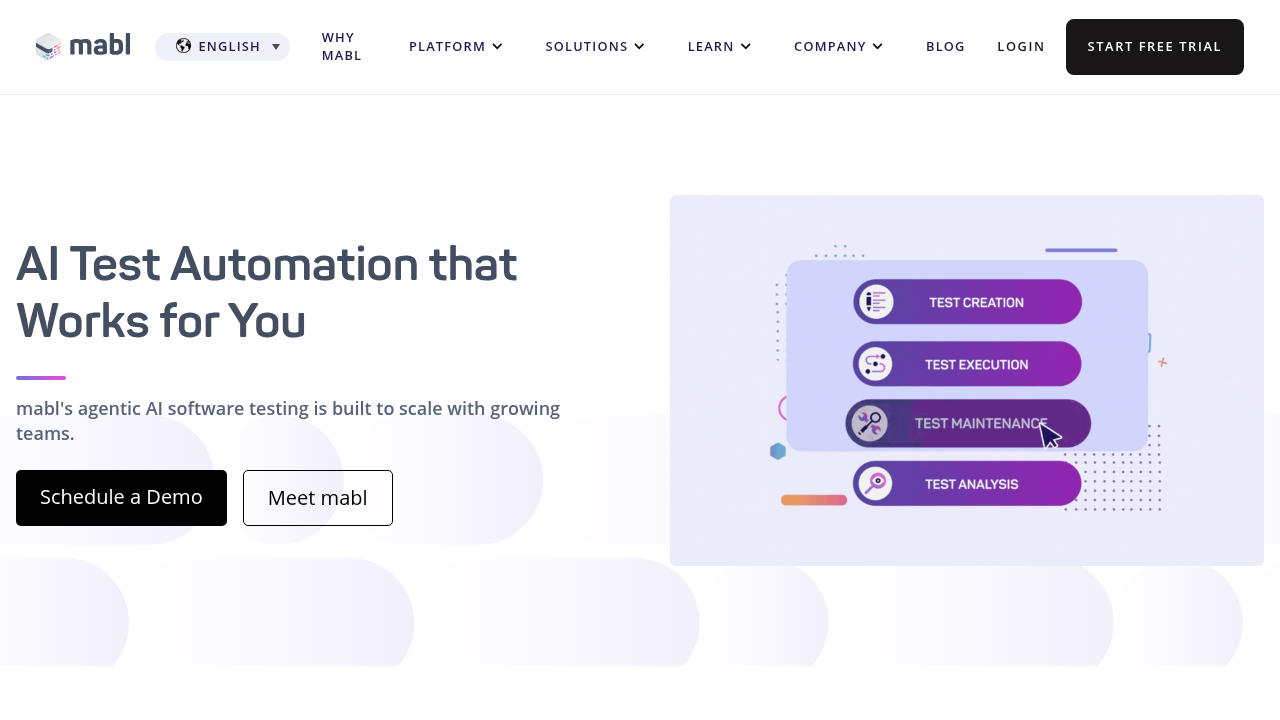

Mabl

Mabl is a low-code test automation solution with machine learning-enhanced element detection.

What they offer

- Visual test recorder that captures user journeys using a proprietary domain-specific language

- Auto-healing capability that attempts to adjust tests when elements change

- Cross-browser testing across Chrome, Firefox, Safari, and Edge

- Integration with CI/CD tools and issue trackers like Jira

Good for: QA teams that want a low-code recorder with some auto-healing for relatively stable applications.

Limitation: Tests remain brittle because Mabl fundamentally relies on XPaths, CSS selectors, and element IDs. While machine learning improves selector resilience, the DOM-based identification approach still requires maintenance when UI changes occur. Weekly iterations trigger ongoing test updates that coordinate-based testing avoids.

Bottom line: Mabl offers better selector management than raw Playwright, but Docket's vision-first approach removes selector brittleness completely for teams with aggressive weekly release schedules.

Tricentis

Tricentis provides enterprise test automation with multiple products focused on different testing needs.

What they offer

- Model-based test automation for creating reusable test components

- Integration with enterprise tools and SAP systems

- Risk-based testing to prioritize high-impact test scenarios

- Support for API, web, mobile, and mainframe testing

Good for: Large enterprises with complex legacy systems requiring extensive integration testing across multiple environments.

Limitation: Tricentis relies on DOM-based testing rather than coordinate or vision-based approaches, with AI capabilities added as bolt-ons instead of core functionality. The solution lacks fully autonomous agentic test runs, requiring manual orchestration between releases. For weekly cycles, the configuration overhead and maintenance burden across frequent changes make Tricentis better suited to quarterly enterprise releases than to rapid iteration.

Bottom line: Tricentis handles enterprise complexity effectively, but Docket's AI-native architecture and vision-based approach deliver the speed and autonomy that weekly release cycles require.

Katalon

Katalon offers a test automation solution with both free and enterprise tiers for web and mobile testing.

What they offer

- Record and playback test creation with script editing capabilities

- Built-in keywords for common testing actions

- Integration with popular CI/CD tools like Jenkins and Azure DevOps

- Test analytics and reporting dashboards

Good for: Small to mid-sized teams looking for a free or low-cost entry point into test automation with a familiar IDE-style interface.

Limitation: Katalon uses traditional element locators (ID, XPath, CSS) that break when developers update the DOM structure during weekly releases. The tool requires significant manual script maintenance to keep tests working across frequent changes. It does not offer coordinate-based testing or autonomous AI agents that can adapt to UI changes automatically.

Bottom line: Katalon provides basic automation at low cost, but Docket eliminates the maintenance burden entirely with self-healing vision-based tests designed for continuous deployment.

testRigor

testRigor uses plain English test authoring to make test creation accessible to non-technical team members.

What they offer

- Plain English test commands that translate to executable tests

- Cross-browser and cross-device test execution

- Visual testing for UI regression detection

- Integration with test management and CI/CD tools

Good for: Organizations that want business analysts or product managers to write tests without coding knowledge.

Limitation: While testRigor simplifies test authoring with natural language, it still depends on traditional element identification methods. Tests require updates when application structure changes significantly. It lacks autonomous exploratory testing capabilities that discover edge cases between weekly releases.

Bottom line: testRigor makes writing tests easier, but Docket combines natural language with coordinate-based execution and AI-driven exploration for truly maintenance-free regression testing.

Reflect

Reflect is a no-code test automation tool focused on simplicity and fast test creation.

What they offer

- Visual test recorder that captures user actions in the browser

- Cloud-based test execution with parallel runs

- Scheduled and on-demand test runs

- Integration with Slack, email, and webhooks for notifications

Good for: Startups and small teams that need basic automated tests running quickly without dedicating engineering resources.

Limitation: Reflect relies on DOM-based selectors that require updates when frontend code changes during weekly development cycles. The tool lacks AI-powered self-healing beyond basic selector fallback strategies. It does not provide autonomous test agents that can perform exploratory testing or adapt test flows intelligently based on application changes.

Bottom line: Reflect offers quick setup for basic cases, but Docket's vision-based approach and intelligent test agents provide the resilience and coverage weekly release cycles require.

ContextQA

ContextQA provides test automation with a focus on reducing test creation time through recording.

What they offer

- Test recorder for capturing user interactions

- Support for web and mobile application testing

- Integration capabilities with CI/CD pipelines

- Cloud-based test execution infrastructure

Good for: Teams looking for straightforward record-and-replay functionality with cloud execution.

Limitation: ContextQA requires manually writing out each click action rather than describing objectives for AI agents to achieve. Their approach lacks the autonomous AI-driven steps that can adapt to application changes automatically. Tests built this way need constant updates as UI elements shift during weekly releases, creating maintenance overhead that slows down deployment velocity.

Bottom line: ContextQA handles basic recording needs, but Docket's objective-based AI agents and coordinate testing eliminate the manual click specification and maintenance burden for fast-moving teams.

Feature comparison table of regression testing tools

Why Docket is the best regression testing tool for weekly releases

Weekly releases demand regression testing that keeps pace with continuous change. The best DevOps teams deploy multiple times daily, compressing the timeline for catching regressions before they reach production.

Docket solves the fundamental problem that breaks traditional regression testing during rapid cycles: test brittleness. Coordinate-based vision testing eliminates DOM selectors entirely, so tests continue working as frontends evolve. While software release lifecycles vary across organizations, teams shipping weekly cannot afford the maintenance overhead that selector-based tools require.

The vision-first approach removes the constant test repair cycle. Autonomous AI agents adapt to UI changes automatically, performing exploratory testing that discovers edge cases between releases. Regression suites stay current without engineering intervention, turning testing from a deployment bottleneck into an automated safety net that scales with release velocity.

Final thoughts on regression testing for weekly deployments

Weekly release cycles expose the fundamental weakness of selector-based testing: constant maintenance that slows down every deployment. Continuous deployment testing needs to adapt automatically as UIs change, not break with every frontend update. Docket's coordinate-based approach removes test brittleness completely, so your regression suites stay resilient without engineering intervention. The right testing strategy turns quality checks from a bottleneck into an automated process that keeps pace with your release velocity.

FAQ

How do you choose the best regression testing tool for weekly releases?

Prioritize test resilience and maintenance overhead. Tools that rely on DOM selectors require constant updates as the UI changes, while vision-based or coordinate-based approaches adapt automatically. Evaluate how much engineering time each solution consumes after initial setup, and whether tests can run fast enough to fit between commits during compressed weekly cycles.

Which regression testing approach works better for teams without dedicated QA engineers?

No-code solutions like Docket, testRigor, or Reflect allow non-technical team members to create tests through natural language or visual recording. Vision-based tools eliminate selector maintenance entirely, while plain-English approaches still require updates when application structure changes significantly during rapid iteration.

Can regression testing tools handle exploratory testing beyond predefined scenarios?

Most tools only execute the specific test cases teams manually define. AI-driven solutions can perform autonomous exploration to discover edge cases and issues that static test suites miss, expanding coverage automatically as applications evolve between weekly releases.

When should teams switch from manual regression testing to automation?

Switch when manual testing cannot keep pace with release velocity or when the same critical flows need verification before every deployment. Teams shipping weekly typically cannot manually test all user journeys within compressed timelines, making automation necessary to maintain quality without blocking releases.
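A quick back-of-the-envelope check can show when that point has been reached. The numbers below (120 flows, 10 minutes each, 3 browsers, 2 testers with 16 hours each per release) are assumptions chosen for illustration, and both helper functions are hypothetical.

```python
def manual_regression_hours(flows: int, minutes_per_flow: float,
                            environments: int = 1) -> float:
    """Total hands-on hours needed to walk every flow in every environment."""
    return flows * minutes_per_flow * environments / 60


def fits_in_window(required_hours: float, testers: int,
                   hours_per_tester: float) -> bool:
    """True if the team has enough testing hours before the next release."""
    return required_hours <= testers * hours_per_tester


# Assumed example: 120 flows x 10 minutes x 3 browsers = 3600 minutes.
required = manual_regression_hours(flows=120, minutes_per_flow=10, environments=3)
available_ok = fits_in_window(required, testers=2, hours_per_tester=16)
print(required, available_ok)
```

With these assumed figures the manual pass needs 60 hours against 32 available, so full manual coverage cannot fit inside one weekly cycle.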

What causes regression tests to break after every UI change?

Traditional tools identify elements through DOM selectors (CSS classes, XPaths, element IDs) that change when developers update frontend code. Coordinate-based or vision-first approaches recognize elements by visual context instead, maintaining test stability as interfaces evolve during continuous development.
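The small example below shows the failure mode using only Python's standard-library HTML parser. The two markup snippets and the helper functions are made up for illustration. Looking the button up by its visible text is used here as a simple stand-in for recognizing an element by what the user sees; it is not a vision-based implementation.

```python
from html.parser import HTMLParser


class ButtonFinder(HTMLParser):
    """Collect (class attribute, visible text) for every <button>."""

    def __init__(self):
        super().__init__()
        self.buttons = []
        self._in_button = False
        self._current_class = ""
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "button":
            self._in_button = True
            self._current_class = dict(attrs).get("class") or ""
            self._text = []

    def handle_data(self, data):
        if self._in_button:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "button" and self._in_button:
            self.buttons.append((self._current_class, "".join(self._text).strip()))
            self._in_button = False


def buttons_in(html: str):
    parser = ButtonFinder()
    parser.feed(html)
    parser.close()
    return parser.buttons


def find_by_class(html: str, css_class: str):
    """Selector-style lookup: depends on the markup's class names."""
    return [b for b in buttons_in(html) if css_class in b[0].split()]


def find_by_text(html: str, text: str):
    """Lookup by what the user sees on the button."""
    return [b for b in buttons_in(html) if b[1] == text]


# Hypothetical markup before and after a frontend refactor.
LAST_WEEK = '<form><button class="btn-primary checkout">Place order</button></form>'
THIS_WEEK = '<form><div><button class="cta cta--lg">Place order</button></div></form>'

print(find_by_class(LAST_WEEK, "btn-primary"))  # one match
print(find_by_class(THIS_WEEK, "btn-primary"))  # no match after the class rename
print(find_by_text(THIS_WEEK, "Place order"))   # still one match
```

The button looks identical to a user in both versions, yet the class-based lookup finds nothing after the refactor.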
